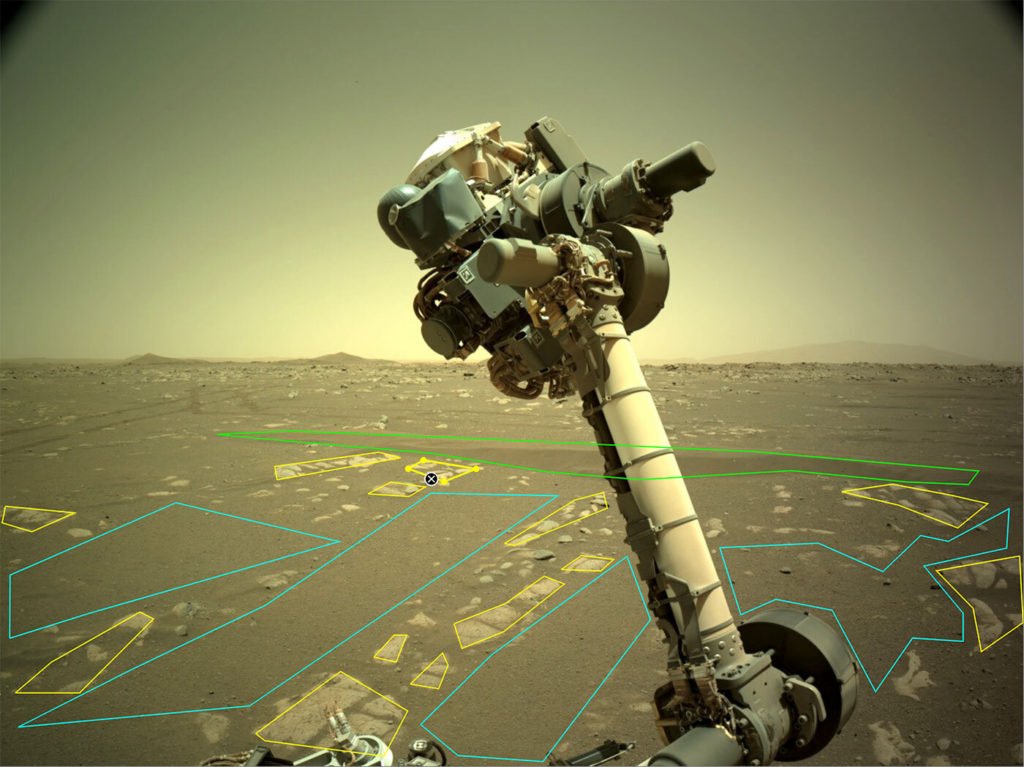

JPL and NASA need your help identifying objects on Mars so that future Mars rovers will have an easier time getting around.

The project is called AI4Mars, and already has more than 12,000 volunteers.

Researchers hope the resulting algorithm can be sent to Mars aboard a future spacecraft that could perform even more autonomous driving than Perseverance’s current AutoNav technology allows.

Today, Perseverance’s 19 cameras send up to several hundred images to Earth each day for scientists and engineers to comb through for specific geological features. Team members only have a few hours to develop the next set of instructions for the rover.

“It’s not possible for any one scientist to look at all the downlinked images with scrutiny in such a short amount of time, every single day,” said Vivian Sun, a JPL scientist who helps coordinate Perseverance’s daily operations and consulted on the AI4Mars project, in a statement. “It would save us time if there was an algorithm that could say, ‘I think I saw rock veins or nodules over here,’ and then the science team can look at those areas with more detail.”

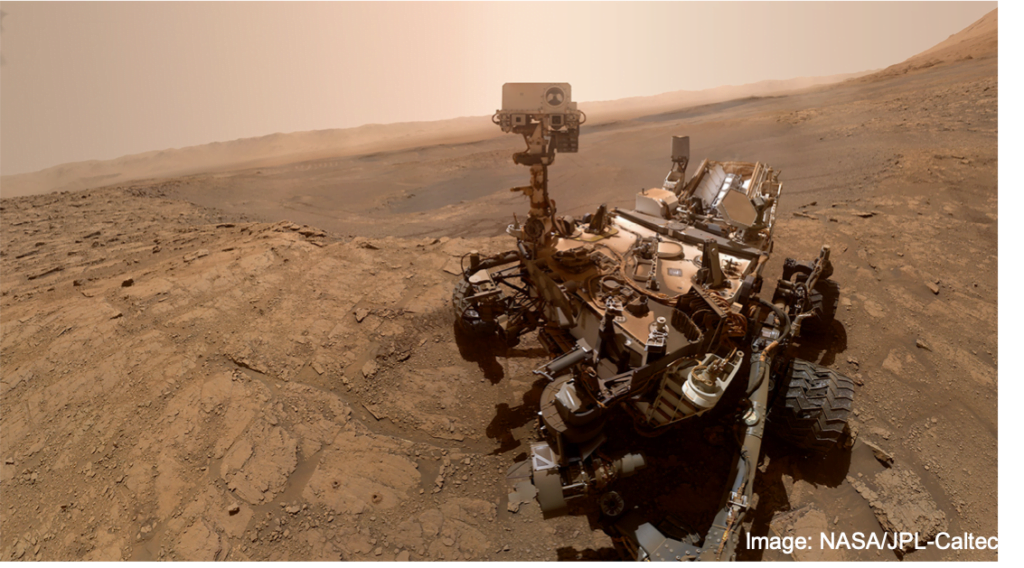

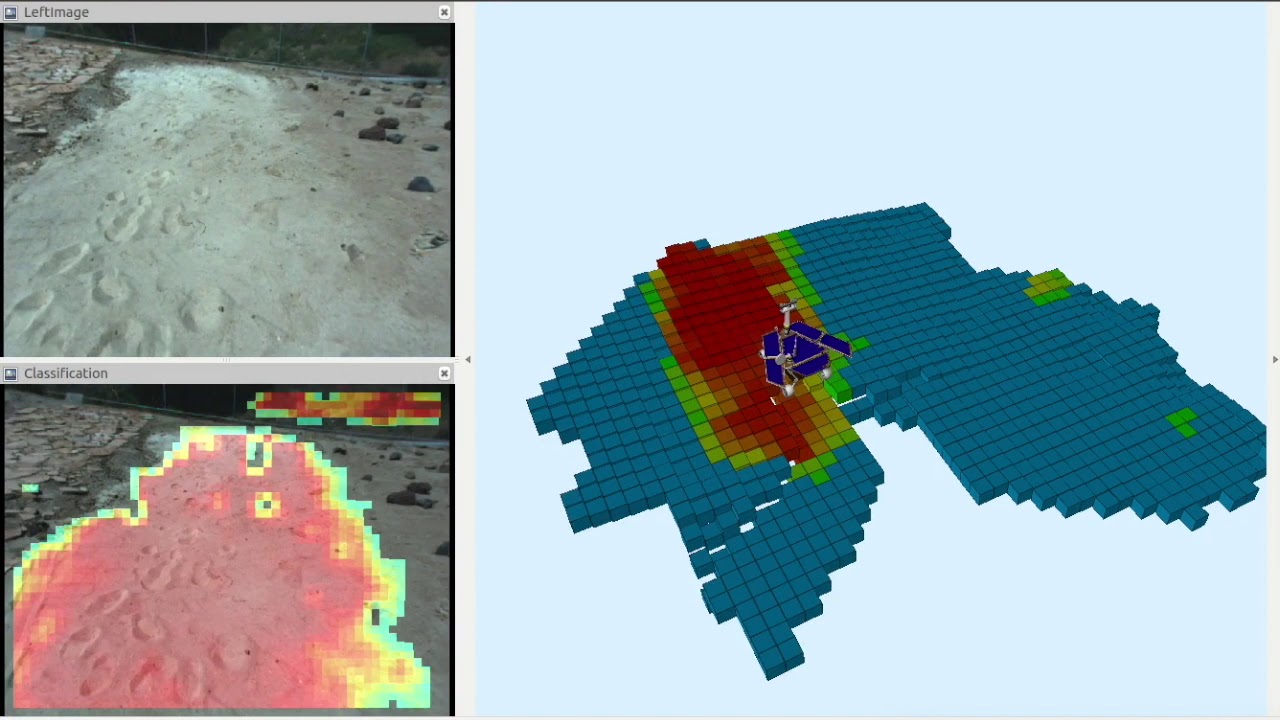

AI4Mars is a continuation of a project launched last year that relied on imagery from NASA’s Curiosity rover. Participants in that project have already labeled nearly half a million images, using a tool to outline features like sand and rock. The end result was an algorithm that could identify these features correctly nearly 98 percent of the time.

This isn’t the first time space research has asked the public for help over the Internet. SETI@home, a distributed computing project, was released to the public on May 17, 1999, to analyze radio signals and help find signs of extraterrestrial intelligence.


Jim DeLillo writes about tech, science, and travel. He is also an adventure photographer specializing in transporting imagery and descriptive narrative. He lives in Cedarburg, WI with his wife, Judy. In addition to his work for MetaStellar, he also writes a weekly article for Telescope Live.