Artificial intelligence-generated art is everywhere.

Just scroll down your Instagram or Facebook feed, and you’ll see a bunch of selfies turned into magic avatars with Lensa, an AI image generator app launched in 2018 that is all the rage at the moment.

Images created by AI aren’t only found in your social media feed — they’ve already made their mark in OpenSim and other virtual environments.

From AI art galleries in OpenSim to AI-created fully immersive three-dimensional environments, AI is already shaping how we experience virtual worlds.

The rise of AI text-to-image generators

Nowadays, pretty much anyone can create AI-generated art thanks to AI text-to-image generators.

DALL-E 2, Stable Diffusion, and Midjourney are three popular AI image generators released just this year, though more than ten options are now available.

All three AI image generators can turn simple text prompts into complete works of art.

The quality of the AI art varies depending on the text you put in and what you’re asking the AI to create.

Here’s a video comparing how the three image generators stack up against each other:

AI art created using these three tools can be found in all sorts of virtual settings.

You can visit this interactive virtual exhibition of DALL-E 2 art on your computer, or on a Meta Quest 2 headset for a more immersive experience.

It’s called the merzDALLEum and is run by German artist Merzmensch.

If you’re on OpenSim, you can take a trip to an AI art museum called AI Dreams in Art.

AI Dreams in Art is a region in Kitely where you can admire and make copies of art created by Dale Innis using Midjourney.

The hypergrid address is grid.kitely.com:8002:AI Dreams in Art.

AI has become so accessible that mass acceptance is now unavoidable, said virtual world hobbyist Han Held.

“AI is already being used by non-professionals with no AI background to enhance their interests and activities,” Held told Hypergrid Business.

Held used Stable Diffusion to create some AI-generated portraits that decorate her home in Second Life.

“The results feel more authentically like paintings than I could have made on my own,” she said.

The art that these AI image generators create doesn’t have to be just two-dimensional.

Here’s an example of how Midjourney can make a three-dimensional virtual reality image:

And here’s a three-dimensional image created with Stable Diffusion by Scottie Fox that you can actually move around in:

Images created using these new AI text-to-image generators aren’t only decorating our virtual worlds — they’re already becoming the virtual worlds we explore.

AI is creating virtual worlds

AI is increasingly being used to create detailed images for virtual worlds and other environments, said Matt Payne, CEO of machine learning consulting firm Width.ai.

“AI algorithms allow developers and designers to create realistic graphics that were once impossible to generate,” Payne told Hypergrid Business.

As the technology improves, AI-generated images are becoming more realistic and complex, and they offer advantages over manually created ones, said Payne. “AI can produce a large number of variations quickly, which makes it easier for developers and designers to choose the best image for their project.”

Tech company Nvidia recently unveiled a new AI model called GET3D that can generate buildings, characters, vehicles, and all sorts of other three-dimensional objects.

And GET3D works fast.

GET3D can generate about 20 objects per second using a single GPU, Nvidia’s Isha Salian wrote in a blog post.

The name GET3D comes from the AI’s ability to generate explicit textured 3D meshes, which means that the shapes come in the form of a triangle mesh covered with a textured material. This way, users can import the objects into game engines, 3D modelers, and film renderers and easily edit them.
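To make “explicit textured mesh” concrete, here is a minimal Python sketch of what such an object contains: 3D vertex positions, triangles that index into them, and texture (UV) coordinates that pin an image onto the surface. The `TexturedMesh` class, its field names, and the `texture.png` file name are hypothetical and are not GET3D’s actual data structures. The export uses the widely supported Wavefront OBJ and MTL text formats, simply to illustrate why a mesh like this can be opened in ordinary 3D tools.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class TexturedMesh:
    """Illustrative explicit textured triangle mesh (hypothetical, not GET3D's format)."""
    vertices: List[Tuple[float, float, float]]  # (x, y, z) positions
    uvs: List[Tuple[float, float]]              # (u, v) texture coords, one per vertex
    triangles: List[Tuple[int, int, int]]       # zero-based vertex indices
    texture_file: str = "texture.png"           # hypothetical texture image name

    def to_obj(self, mtl_file: str = "mesh.mtl", material: str = "textured") -> str:
        """Serialize the mesh as Wavefront OBJ text."""
        lines = [f"mtllib {mtl_file}"]
        lines += [f"v {x} {y} {z}" for x, y, z in self.vertices]
        lines += [f"vt {u} {v}" for u, v in self.uvs]
        lines.append(f"usemtl {material}")
        # OBJ indices are 1-based; "i/t" pairs vertex i with texture coordinate t.
        # Here each vertex uses the UV coordinate stored at the same index.
        for tri in self.triangles:
            lines.append("f " + " ".join(f"{i + 1}/{i + 1}" for i in tri))
        return "\n".join(lines) + "\n"

    def to_mtl(self, material: str = "textured") -> str:
        """Companion .mtl text: map_Kd applies the image as the diffuse texture."""
        return f"newmtl {material}\nmap_Kd {self.texture_file}\n"


# Usage: a flat square made of two textured triangles.
quad = TexturedMesh(
    vertices=[(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0)],
    uvs=[(0, 0), (1, 0), (1, 1), (0, 1)],
    triangles=[(0, 1, 2), (0, 2, 3)],
)
obj_text = quad.to_obj()
mtl_text = quad.to_mtl()
print(obj_text)
```

Because the result is just triangles plus an image, an artist can move vertices or repaint the texture afterward in whatever tool imported it.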

AI is also being used to transfer the artistic style of one image into another.

An AI algorithm can be trained on images with a specific artistic style, and that training can then be used to apply the same style to other images, said Amey Dharwadker, a machine learning engineer at Facebook.

“This can be used to create a wide variety of artistic styles and can be applied to 3D objects and environments in video games and virtual worlds,” Dharwadker told Hypergrid Business.
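Style transfer systems need a numerical way to describe “style.” One widely used approach, from the neural style transfer work of Gatys et al., compares Gram matrices: correlations between a neural network’s feature channels, averaged over every position in the image, so they capture textures and color patterns but not layout. The Python sketch below is a simplified version of that style-loss piece only. It is not necessarily what Facebook or Google use, and the toy feature maps are made-up numbers standing in for activations a trained network would produce. A full system would minimize this loss, together with a content loss, by adjusting an image or training a network.

```python
from typing import List

Matrix = List[List[float]]


def gram_matrix(features: Matrix) -> Matrix:
    """features[c][p] is channel c's activation at spatial position p.

    Entry G[i][j] is the average product of channels i and j over all
    positions, so it records which features co-occur but not where.
    """
    n_pos = len(features[0])
    return [[sum(a * b for a, b in zip(fi, fj)) / n_pos for fj in features]
            for fi in features]


def style_loss(candidate: Matrix, style: Matrix) -> float:
    """Mean squared difference between the two images' Gram matrices."""
    g_c, g_s = gram_matrix(candidate), gram_matrix(style)
    n = len(g_c)
    return sum((g_c[i][j] - g_s[i][j]) ** 2
               for i in range(n) for j in range(n)) / (n * n)


# Toy 2-channel, 4-position feature maps (made-up numbers, not real network output).
style = [[1, 0, 2, 1], [0, 1, 1, 2]]
rearranged = [[1, 2, 0, 1], [2, 1, 1, 0]]  # same features, positions reversed
different = [[0, 0, 0, 0], [1, 1, 1, 1]]

print(style_loss(rearranged, style))  # 0.0: same "style", different layout
print(style_loss(different, style))   # 1.125
```

The rearranged example scores a loss of zero even though its pixels are in different places, which is exactly why Gram matrices suit style: they ignore where things are and keep how features combine.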

Here’s a video of a Google Stadia demo showing how the technology works in real time:

While Google Stadia recently shut down due to a lack of popularity, style transfer technology can still be used to change the artistic styles of virtual reality worlds and games without needing a human artist to do the work.

AI could help you create metaverse environments with just your voice

In the future, we could all be the artists who speak our virtual worlds into existence.

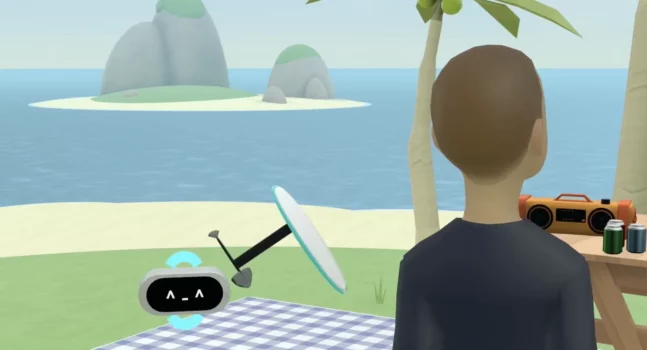

Back in February, Meta’s Mark Zuckerberg demonstrated the company’s Builder Bot, an AI tool that lets you change your virtual surroundings in the metaverse with just your voice.

“It enables you to describe a world, and then it will generate aspects of that world for you,” Zuckerberg said in the demo.

Zuckerberg and another Meta employee used voice commands in the demo to create a simple beach scene that had clouds, a picnic table, and Zuckerberg’s hydrofoil.

The technology still has a long way to go.

In the Meta demo, the scene looked low-res and lacked detail.

The future of AI images in virtual worlds

As AI continues to mature, it could eventually generate lifelike environments that we can’t distinguish from reality.

One possible path is generative adversarial networks, or GANs, said Facebook’s Dharwadker.

GANs are a type of AI model consisting of two neural networks that are trained together to produce realistic images, said Dharwadker.

“GANs have shown great potential for generating high-quality images and could be used to create more realistic and immersive environments,” he said.
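The two networks Dharwadker describes are usually called the generator and the discriminator. In the original GAN formulation (Goodfellow et al., 2014), the generator G turns random noise z into an image, the discriminator D outputs its estimate of the probability that an image is real, and the pair is trained as a two-player game over this objective:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\bigl[\log D(x)\bigr]
  + \mathbb{E}_{z \sim p_z}\bigl[\log\bigl(1 - D(G(z))\bigr)\bigr]
```

The discriminator tries to push D(x) toward 1 for real images x and D(G(z)) toward 0 for generated ones, while the generator tries to fool it by making D(G(z)) approach 1. As both improve, the generated images become harder to tell apart from real ones. Practical GANs often modify this loss, so it is best read as the textbook starting point rather than the exact recipe behind any particular product.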

AI could also be used to support human creativity, Dharwadker added. “This could include using AI to suggest ideas and concepts or to refine and enhance existing human artist designs.”

In the future, not only could AI create totally realistic virtual environments for us to explore, but it could also work hand in hand with humans to create the images we find in virtual worlds.

“By combining the creativity and ingenuity of humans with the computational power of AI, it is possible to create even more impressive and engaging images for video games and virtual worlds,” said Dharwadker.

MetaStellar news editor Alex Korolov is also a freelance technology writer who covers AI, cybersecurity, and enterprise virtual reality. His stories have also been published at CIO magazine, Network World, Data Center Knowledge, and Hypergrid Business. Find him on Twitter at @KorolovAlex and on LinkedIn at Alex Korolov.